“The risk that matters most is the risk of permanent loss.” – Howard Marks

“When we speak of risk, we are not speaking of share price volatility. Share price volatility provides us with opportunity. When we speak of risk we are thinking about exposure to permanent loss of capital.” – Chuck Akre

I increasingly find most investment analysis is done backwards. There are two broad disciplines that are mandatory to generate superior returns over the long term: business analysis and security analysis. The former deals with questions like why does the business exist? What value does it give customers? Why do customers choose it over competitors? Why is it a superior competitor? Why can’t that be imitated or competed away? What kind of returns does it generate? How much does it have to invest to generate those returns? And many more over a business cycle.

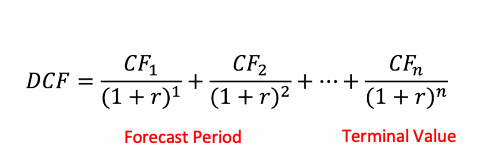

Notice I mentioned nothing about what the business is worth. That’s for security analysis. In the latter, investors routinely, and futilely, obsess over what PE ratio or EV-to-EBITDA multiple a stock trades at compared to others. The more ambitious investor may try to take a specific forecast in his mind of what the market is missing and put pen to paper in assessing what the business is worth. He may accelerate growth, make it more profitable, or adjust some other factor over a forecast period to see if there is any value in the security’s current price. But then he must deal with forever and say that beyond the time of his forecast the business will continue to operate in some form of steady state, distributing cash flow.

Figure 1: A Basic DCF Model

Investors fixate on the forecastable period but investment returns in the long run follow a type of power law distribution: 80% of the returns are driven by what investors tend to spend about 20% (if that) of their time thinking about, the essential quality of the business. They may try to look out two or three years thinking that surely within that time period the market will come around to their way of thinking on a stock. But all they are truly doing is hoping that the market’s propensity for animal spirits will break their way; they’re betting on randomness.

Any trend can grip a business for a year or two. Any fad can capture a market for a short period of time. Or something unexpected can arise and push profitability out further into the future. But competitive advantages, moats, superior ROIC, those all live in the final term, the terminal value. There’s no realistic way to get the number right. But that’s not the point. Anyone can make a model say anything. The terminal value sits there to represent forever. What you need to do though is avoid getting it wrong. That’s where real risk lies. That’s where capital gets permanently lost. And that judgment comes from business analysis. If a business is truly superior it will show up on a long-enough timeline, not some convenient forecastable period.
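
To make the weight of the terminal value concrete, here is a minimal sketch of a basic DCF in Python. Everything in it is an illustrative assumption rather than a figure from this post: the function name `dcf_value`, the five-year forecast of cash flows growing 10% a year, the 8% discount rate, and the 3% perpetual growth rate used in a Gordon growth terminal value.

```python
def dcf_value(cash_flows, discount_rate, terminal_growth):
    """Return (total value, PV of forecast period, PV of terminal value).

    Hypothetical helper: the terminal value uses the Gordon growth formula,
    which assumes cash flow grows at a constant rate forever.
    """
    if discount_rate <= terminal_growth:
        raise ValueError("discount_rate must exceed terminal_growth")

    # Present value of each explicitly forecast year's cash flow.
    pv_forecast = sum(
        cf / (1 + discount_rate) ** year
        for year, cf in enumerate(cash_flows, start=1)
    )

    # Value, at the end of the forecast, of every cash flow after it.
    terminal_value = (
        cash_flows[-1] * (1 + terminal_growth) / (discount_rate - terminal_growth)
    )
    # Discount that lump sum back to today.
    pv_terminal = terminal_value / (1 + discount_rate) ** len(cash_flows)

    return pv_forecast + pv_terminal, pv_forecast, pv_terminal


# Assumed inputs: 100 of cash flow today, growing 10% a year for five years.
cash_flows = [100.0 * 1.10 ** year for year in range(1, 6)]

total, pv_forecast, pv_terminal = dcf_value(cash_flows, 0.08, 0.03)
terminal_share = pv_terminal / total

# Same forecast, but "forever" grows at 2% instead of 3%.
lower_total, _, _ = dcf_value(cash_flows, 0.08, 0.02)

print(f"Terminal value share of total: {terminal_share:.0%}")
print(f"Value change from a 1-point cut in terminal growth: {lower_total / total - 1:.0%}")
```

Under these assumed inputs roughly four-fifths of the value sits in the terminal term, and trimming perpetual growth by a single point moves the answer by a double-digit percentage without touching the five forecast years at all.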

What does this have to do with semiconductors today? I’ll explain momentarily. First a recap of Part I and then some history.

In Part I we established:

- Moore’s Law has 3 components: every two years semiconductors would be able to double the number of transistors on a chip resulting in 2x the performance, half the energy consumption and at half the price

- The boom thanks to Moore’s Law saw computing move from the mainframe to the desk. Corporate managers, wanting to avoid the security risks and technical difficulties of everyone on their own devices, went to IBM to get a standard, corporate wide solution

- IBM, pressed for time, wasn’t able to design their own product and instead outsourced the CPU and Operating System pieces to two firms, Intel and Microsoft

- The propagation of Intel chips meant that their x86 Instruction Set Architecture (the language for how a processor interprets and executes instructions) wins out over competitors and becomes the central point of the software development universe. With its set of tools (compilers, frameworks and other machine code) software runs best on an Intel chip. Intel has its moat.

- Moore’s Law technically died around 2007. We are able to get more powerful and energy efficient chips by putting more cores on a single chipset but costs begin to skyrocket.

- At the same time, computing becomes even more continuous. It moves from the desktop into our pocket thanks to the birth of the smartphone market. Intel passes on manufacturing a processor for Apple’s first phone because, being outside the x86 ecosystem, the profit opportunity just isn’t there.
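
The compounding in the first bullet is easy to underestimate, so here is a small sketch of the arithmetic. The function name `moores_law_projection` and the ten-year horizon are assumptions for illustration; the two-year doubling period and the three components come from the bullet itself.

```python
def moores_law_projection(years, doubling_period_years=2.0):
    """Project the three components of Moore's Law as multiples of today's level.

    Hypothetical helper: assumes a clean doubling every period, which is the
    idealized statement of the law, not a measured history.
    """
    doublings = years / doubling_period_years
    factor = 2.0 ** doublings
    return {
        "relative_performance": factor,      # 2x per doubling
        "relative_energy": 1.0 / factor,     # half per doubling
        "relative_price": 1.0 / factor,      # half per doubling
    }


decade = moores_law_projection(10)
print(decade)
```

Ten years is five doublings, so the idealized law implies 32x the performance at 1/32 of the energy and 1/32 of the price.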

In 1987 the government of Taiwan recruited Morris Chang to come to the country in an effort to boost the nation’s high-tech sector. Chang had been a Vice President at Texas Instruments just a few years prior and oversaw their entire semiconductor business but got passed over for the CEO position. Chang had noticed that many designers of chips wanted to leave their current positions and pursue their own business ventures. The problem was that in those days the dominant wisdom was that a chip company needed its own manufacturing to deliver chips. There wasn’t enough excess capacity at firms that owned their own manufacturing (that also designed their own chips). If you were lucky enough to find capacity, you ran the risk of your Intellectual Property being stolen by your manufacturer (who doubled as your competitor) or suddenly having your capacity cut. Raising the capital to build a manufacturing fab was exorbitant and a major barrier to entry so designers stood pat. That’s why, as AMD’s then CEO stated, “Real men have fabs.” That’s where Chang stepped in.

He noticed that Taiwan didn’t have much tech expertise at all. The one thing they could do was manufacture and do so at a cost advantage. So, he created Taiwan Semiconductor Manufacturing (TSMC) with the sole focus being on manufacturing others’ designs and doing so as the low-cost leader. He dedicated the company to never competing with its customers.

Chang’s business model choice was revolutionary. The company wasn’t a tech leader nor the best manufacturer around (that was still Intel) but its role as a Switzerland of manufacturing and price advantages enticed customers. It gave birth to a whole new industry as the barriers to chip design came tumbling down. Firms like Qualcomm and Nvidia owe their existence, or at least their business model, in part to Chang and TSMC. The bundle between design and manufacturing was bursting; sales of chips from firms without their own manufacturing skyrocketed 400% over the ensuing decade.

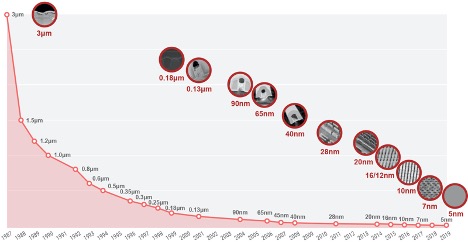

Chang’s low pricing was a strategy bent on market share. The more design firms that chose TSMC as their manufacturer the cheaper his costs got (on a per-unit basis). Crucially, it also provided greater scale by which to invest in R&D so that TSMC’s manufacturing process could improve and compete better with industry leaders. TSMC learned how to make chips smaller and thus more powerful, energy efficient and cheaper (the three legs of Moore’s Law). From one node to the next the company’s learning accelerated (Figure 2). The only factors that really slowed it down were the tech bust of the early 2000s and the Great Financial Crisis, which weighed down the entire ecosystem.

Figure 2: TSMC’s Process Technology Got Better Quickly

Keep in mind however that despite the enormous gains TSMC saw in its know-how it still played second fiddle during just about this entire time. It was still behind Intel, the top dog in chip manufacturing. That’s because when it comes to manufacturing semiconductors knowledge is a cumulative endeavor. These are components whose size is a fraction of the width of a human hair, packed together ever so tightly and even now built in three dimensions; they flirt with the laws of physics. Intel, by virtue of having been ahead for decades, still held the lead on developing the next generation of chips, using new equipment to print those chips, knowing where the problem points in development were, and so forth.

These aren’t manufacturing processes that we’re traditionally accustomed to, taking component A and connecting it to component B, attaching that to part C, and so forth; a LEGO model of manufacturing if you will. No, this is far more complicated. Take ASML’s latest lithography machine as an example, designed to take a light source and print a highly specific pattern on a silicon wafer (Figure 3). It’s a vital piece of equipment in 2021.

Figure 3: ASML’s EUV Machine

The device takes a piece of molten tin, blasts it with a laser, bounces the light source dozens of times off mirrors at precise angles so that the light can be manipulated in such a way that it travels through a mask and onto a wafer in the most perfect manner in order to print a pattern. We’re talking about taking extreme ultraviolet light, with a wavelength of about 13.5 nanometers (the prior generation of lithography machines used light of ~193 nanometers), and using it to print features measured in nanometers in order to print a chip design. The most minute of errors derails the entire process and ruins the entire transistor, turning it into scrap. And that’s just a single step in a process that involves at least another half dozen stages of layering materials on and chipping them off so that a design can be printed. All so we can have the latest greatest chips for our computers and smartphones.

ASML’s EUV machine above costs ~$400 million for a single unit. It is so heavy that it has shaken the foundations of buildings. Shipping it involves taking it apart into six pieces and loading them onto three different Boeing 747s bound for its destination, where it is reassembled. This is manufacturing of the highest order involving complicated physics, not component assembly. It shows how manufacturing in this industry is its own intellectual property because of the sheer technical difficulty.

The machine above is more of a 2021 lay of the land (EUV was not used before the current generation of chips) but the principle that this is not an everyday type of equipment is the larger point and what should stand out. It has always taken a lot of learning and mastery of the equipment by the brightest of engineers to maximize output in an efficient manner, and each successive generation gets more complicated; it’s not as simple as adding one new machine or one new step to the process. The process must be reinvented each time to get the desired improvements.

I make this point to emphasize that it is almost impossible to surpass a manufacturing leader by virtue of the complexity of the process. Closing the gap is possible with enough learning but attaining the top position requires the current leader to stumble. “Enough learning” being the operative phrase. The birth of the smartphone was a brand-new computing market that would see billions of devices come into existence, enough scale to allow manufacturers to continue to invest in new processes and new equipment just as costs were beginning to rise. And it was a market that Intel was letting them have.

Simultaneously, once rebuffed by Intel, Jobs and Apple moved to a different CPU design, one by ARM Holdings. ARM, like TSMC, has its own unique business model. They offer blocks of IP for customers to make their own chips or put in their own devices in exchange for a license fee. The cost of an ARM chip is just a few dollars, not the hundreds of dollars per chip that you’d traditionally see from a designer. Most importantly, ARM’s specialty was chips designed for low power consumption. Intel’s, on the other hand, are concerned with one thing: maximum power. For a mobile device without a fan, like a phone or tablet, maximum power consumption is a massive detriment as it eats up battery life and risks overheating. ARM’s products didn’t pose such a risk. They may not have been the most powerful on the market, but alternatives were a non-starter. They were also customizable, something that Intel refused to budge on; Intel made chips it believed were the best, designed specifically for its own manufacturing process. Take it or leave it.

Apple used standard ARM chips for a few years before surprising the market with an announcement in 2010 that its iPhone 4 would feature a chip that Apple had designed internally with its own intellectual property. It was an unprecedented announcement, harkening back to the days of IBM doing everything in house. But Apple had developed the talent and resources internally to design a chip that was integrated with its own iOS software and that would result in the best performance and user experience. When you’re selling premium products at a premium price, top-notch user experience is a necessity and Apple had determined that continuing that trajectory required its own chips. Integration between software and hardware had been Intel’s ace in the hole for decades; now it was Apple’s. By this point, Apple was selling close to 70 million phones worldwide but was on the way to over 200 million just five years later. That helped fund more R&D efforts into making their phones, and the chips inside them, better and better.

Apple hasn’t looked back since. Each successive design of its chips kept getting better and better and maximizing that performance meant always having access to the best technological roadmap. But that was beginning to become more and more difficult. Other dedicated foundries started having problems developing a process by which they could shrink chips; they either couldn’t get the process to work, lengthened their development roadmap, or the investment became uneconomical. For instance, Global Foundries (spun out of AMD as a contract manufacturer a la TSMC during the financial crisis…so much for real men) began having problems developing new process technology in the 2011-2013 time frame. They kept having to push out timelines and experienced higher costs. Longer timelines frustrated customers as it meant they would either have to redesign a chip for a new manufacturer (designs are not portable) or risk being late to market. By 2018, Global Foundries dropped out of the market of leading-edge chips, preferring to stick to older processes for customers that didn’t need the fastest and most intensive technology.

But they weren’t alone. At the end of 2013 Intel announced delays to its next flagship product. Patterning chips was getting harder, they said. But management insisted it was nothing to worry about. It was just a 3-month delay, and after all, they had a 5-year lead on rival TSMC. Their top-of-the-line CPU products at 14nm would be ready for the first quarter of 2014. Well, the first quarter turned into the first half of 2014. The first half of 2014 ended up being 2015, all while the company was supposed to be simultaneously developing 10nm process technology. But those plans got pushed back as well. While Intel was two years late in shipping its 14nm products to customers in meaningful volume, the company ended up being six years late with its 10nm products.

The dam was breaking. The thread was unraveling. Being two years late is astounding, especially for an iconic manufacturer. Being six years late is an eternity in this industry; it shook up an entire ecosystem. Companies design products years in advance on the assumption that Intel’s products will be ready when Intel says they will be. Intel’s own design teams had to adapt on the fly, finding new ways to squeeze performance improvements out of old designs or develop new ways to connect processor cores together so that the whole package would be better off.

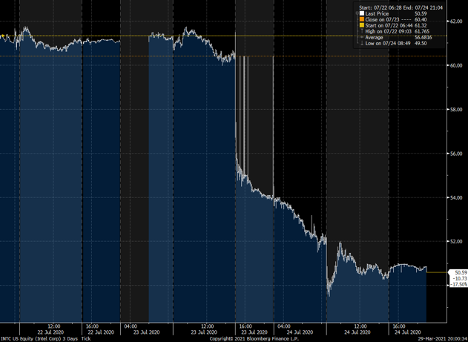

TSMC all the while continued making steady progress. They didn’t make gargantuan leaps, but Intel’s lead slipped slowly. Then, in the summer of 2020, it slipped dramatically. Once again, Intel pushed out its process, this time for 7nm. The stock reacted accordingly, down 18% on the news (Figure 4).

Figure 4: Intel’s Technological Advantages (and Market Value) Disappearing

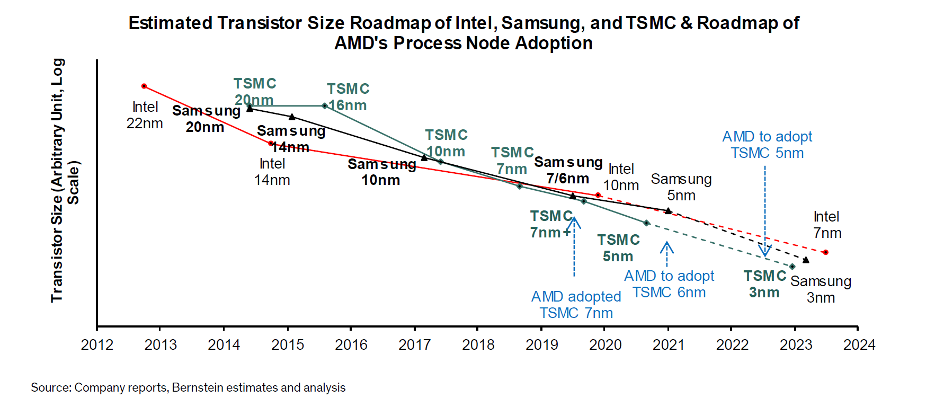

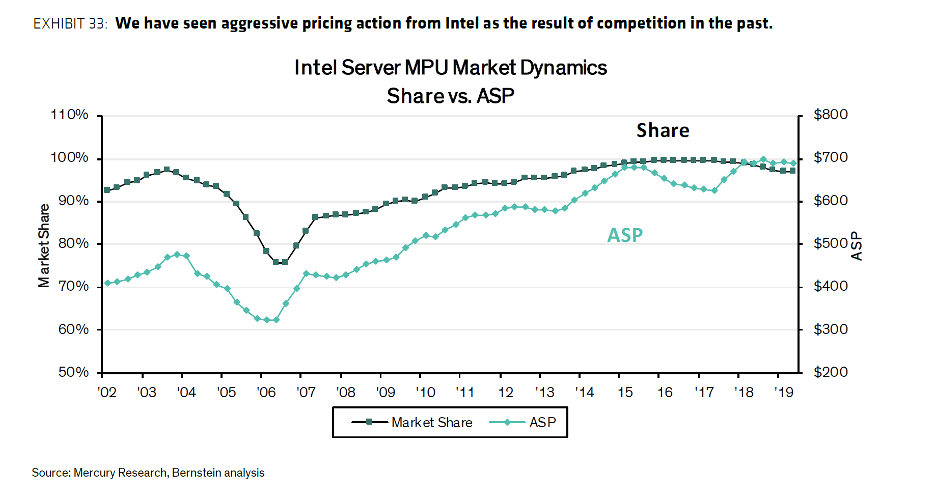

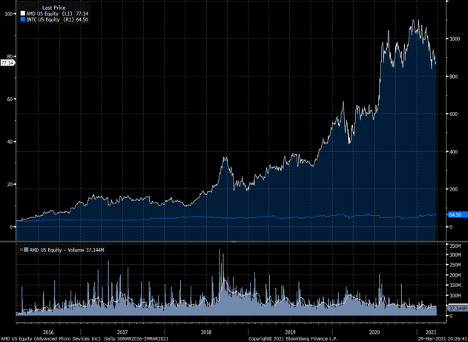

The impossible had happened. The gap that TSMC could never close on its own was now gone because Intel dropped the ball. The extended timeline meant that by 2023 TSMC would be a full generation ahead. In the span of six years what had taken decades to build up was now gone. Intel would now have to learn how to exist without a process technology advantage (Figure 5, note: Intel’s 7nm is approximately equivalent to TSMC’s 3nm). Given that their designs were always prepared with such an advantage, what would the future hold for such an iconic company? Instead, their chief rival for CPUs, AMD, would have a process advantage for the first time in its history with plenty of opportunity to take share since they relied on TSMC for production; Intel’s share in servers is currently ~95%, down from 99% a few years ago. AMD’s stock has reacted accordingly to the opportunity (Figure 6).

Figure 5: TSMC Will Have a Full Year Advantage In Short Time, Bernstein

Figure 6: Intel’s Loss is AMD’s Gain in Servers…and in Share Price

How did Intel find itself in this position? No one knows for sure but putting together evidence from multiple sources suggests a combination of a few factors. For one, Intel had a history of not using top-tier equipment in its processes. This let them keep costs down (maintaining their superior margins) and they did so because they believed their design superiority could overcome those equipment challenges. Even when hints of troubles with 14nm came up the company didn’t adapt in successive generations. Second, the 10nm technology transition used brand new types of materials that complicated things. Third, Intel bit off more than it could chew at 10nm; instead of trying to roughly double the transistor density (usual progressions increased density 2.2-2.4x prior levels) the 10nm process attempted to increase the density by 2.7x, their biggest leap in history. Finally, some engineering talent has also left the company over the years.

So why does this all matter? Is this an overreaction and are we spending too much time fixating on one company?

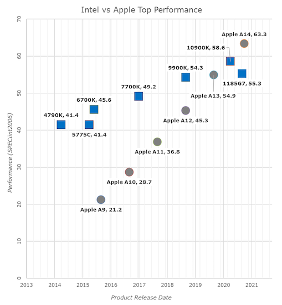

What I’ve come to understand and believe is that the paradigm of computing is changing, and will be different forever into the future. The computing and developer ecosystems have started paying attention to the fact that there are other ways to build chips, including different ecosystems outside of Intel’s x86. That’s the significance of Apple’s developments. Its latest chip, the first in its own laptops, has surpassed Intel’s in terms of performance and didn’t use any IP from Intel’s ecosystem; instead it relied on ARM IP developed internally (Figure 7).

Figure 7: Apple’s Own Chips Now Outperform Intel’s CPUs

Apple isn’t alone. Amazon has started designing its own chips based on ARM, carefully tailored to its own workloads in its servers. They’ve seen their costs fall 50% as a result. Companies have learned that graphics processors from Nvidia, originally built for gaming, are great at running machine learning models. Google has moved to developing its own chips for machine learning but also for processing images in a better way via software in phones. This is all part of the rise of heterogeneous computing. Rather than depending on one ecosystem, one ISA (x86), to do every type of computing workload, companies have learned to specialize individual component chips, tie them together on one silicon die and deliver improved performance. While CPUs weren’t progressing on the timelines we were used to, specializing a separate component for a specific task improved the overall performance. One chip could be used for processing images, another could be used for video, another for machine learning and all could sit on the same piece of silicon. It’s the classic case of specialization outperforming general solutions, the latter being represented in the industry by CPUs having to do everything.

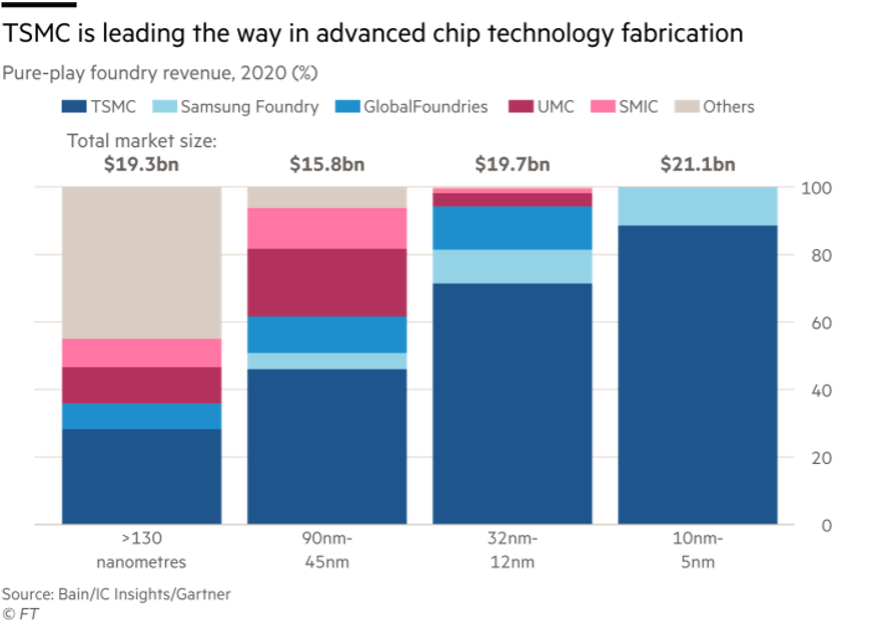

But this is all happening against a backdrop of diminishing production capacity globally, especially for chips that need the latest process to deliver gains. With companies exiting the market and Intel’s struggles, approximately 80% of next generation chips come from Taiwan (Figure 8). 50% of automotive microcontrollers come from TSMC alone. When auto OEMs cut production in 2020, they ceded their position in line at essentially the sole manufacturer and haven’t been able to get back in line since. Hence why so many car companies have cut production this year. It’s why 2021 has been the year of the chip shortage. We have a greater need for chips than ever before because the barriers for design have come down while the options for manufacturing are scarcer than ever. The market is being squeezed from two sides; demand surges are colliding with supply constraints.

Figure 8: The Options to Get A Leading-Edge Chip Built Have Dwindled

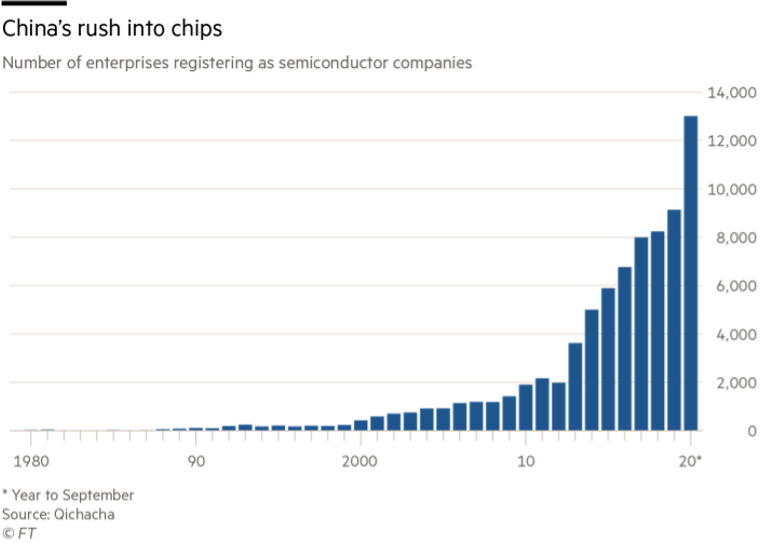

But that’s not even the greatest fear on investors’ or analysts’ minds. Rather, it’s the enormous push China is making for technological independence and what lengths it may be willing to go to (Figure 9). Namely, if China has its own existential rationale and ambition for “reuniting” Taiwan into its orbit what does that mean for the structure of the global economy as we know it? If a six-month push out of orders is decimating the auto supply chain now, what would a scenario of not having access to Taiwan at all mean? Furthermore, it’s one thing to not have the latest chips for smartphones, cars or computers, but what about nuclear plants, military equipment, or medical devices?

Figure 9: China’s Quest for Its Own Semiconductor Supply System

This is why Intel’s stumble after stumble over the last decade is so monumental. We’re at the precipice of a paradigm change in computing and Intel will not have the lead. In fact, it will be trailing. What comes next is uncertain, including the geopolitical backdrop.

To its credit, Intel finally seems to be changing course and doing so dramatically thanks to new leadership. But their work is cut out for them. The company still has some extraordinary IP and top-notch engineering talent. The vigor of the new CEO is highly encouraging. But the new strategy is still a bit unclear and the company seems to be trying to do everything and be all things to all people. Its changes won’t even begin to be felt until 2023. Meanwhile, TSMC continues on.

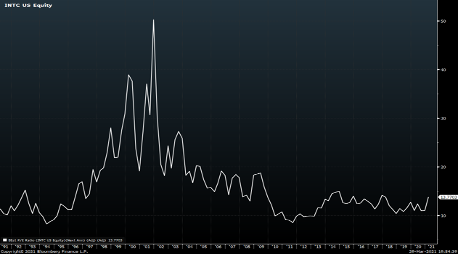

Tying this back to the introductory portion of this post, take a look at Figure 10, a graph of what PE ratio Intel has traded at for the last 30 years.

Figure 10: Intel’s Past and Current Earnings Multiple

Intel’s multiple history is noteworthy. In the run up to the Tech Bubble it traded at premium levels, to competitors and the market as a whole. Even after that bubble burst it still maintained its premium valuation. But after the Financial Crisis it didn’t recover anything near the level of the prior two cycles. Today, with the market at around 19x forward earnings Intel sits at 13x, an enormous discount. For years this confounded investors. Intel always looked cheap. It always looked like a value. It made no sense for a company with 99% share in servers in the midst of the cloud computing boom and with incredible IP to trade at a discount. What was going on?

In my opinion, investors weren’t thinking in the context of the terminal value of the business. While they were fixated on what the multiple was today or next year, the market was worried about forever. Not that Intel wouldn’t exist, but that the means of protecting its superiority was fading. The moat around the castle had been crossed. The market was discounting that Intel would turn into just another company. The risk wasn’t that earnings would be lower in the next year or two. The risk the market was sniffing out was that the means of generating those earnings was damaged and the consequence would be either a “terminally” impaired ability to put up superior returns or the need to pay a higher price to get them. In either scenario, that means less future cash for shareholders.
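
One way to see how a multiple can quietly encode a view about forever is the steady-state relationship between a forward PE and the returns a business earns on new capital. The sketch below is an assumption-laden illustration, not a valuation of Intel: the function name `justified_forward_pe`, the 8% cost of equity, and both sets of growth and return inputs are hypothetical, and it treats earnings not needed for reinvestment as cash paid out to shareholders.

```python
def justified_forward_pe(cost_of_equity, perpetual_growth, return_on_new_capital):
    """Steady-state forward PE implied by growth and returns on reinvestment.

    Hypothetical helper based on the constant-growth model:
        PE = (1 - growth / return_on_new_capital) / (cost_of_equity - growth)
    """
    if cost_of_equity <= perpetual_growth:
        raise ValueError("cost_of_equity must exceed perpetual_growth")
    if return_on_new_capital <= 0:
        raise ValueError("return_on_new_capital must be positive")

    # Share of earnings that must be reinvested to fund the growth.
    reinvestment_rate = perpetual_growth / return_on_new_capital
    # What is left over is the cash available to shareholders.
    payout_rate = 1.0 - reinvestment_rate
    return payout_rate / (cost_of_equity - perpetual_growth)


# Assumed "moat intact" case: 4% growth funded at 25% returns on new capital.
wide_moat_pe = justified_forward_pe(0.08, 0.04, 0.25)

# Assumed "moat crossed" case: slower growth that costs more to achieve.
faded_moat_pe = justified_forward_pe(0.08, 0.03, 0.10)

print(f"Moat intact:  {wide_moat_pe:.0f}x")
print(f"Moat crossed: {faded_moat_pe:.0f}x")
```

With these inputs next year’s earnings are identical in both cases, yet the justified multiple drops from about 21x to about 14x. Nothing about the near-term forecast changed; only the terminal assumptions did.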

The first step to contemplating an investment should be to think with the terminal value in mind, that is, to think about the essential nature of the business and its level of. Worrying about earnings over the next year or two is futile compared to terminal value. Quantification, whether earnings or multiples, has the tendency to provide an illusion of certainty. It’s like worrying about running out of food aboard the Titanic; you’ve got bigger problems ahead of you. It’s why business analysis should always trump security analysis.