When Moore Isn’t Enough: Part I

Author: Joel Charalambakis

Date: February 23, 2021

It seems like every day there’s a new story about the global shortage of semiconductors (chips), affecting everything from smartphones, to computers, to data centers and medical devices (examples here, here, here, and here). Perhaps no industry better serves as the poster child of the current predicament than the automotive industry. The industry is notoriously capital-intensive and competitive, with long supply chains and thin margins. To preserve capital, manufacturers frequently hold off on ordering one component if a complementary component is not available. Given the rising digital intensity of vehicles, there are now more points of vulnerability than in years past. Porsche’s CEO warned that the current imbalance could derail operations for months, while Ford and Volkswagen estimated first-quarter production cuts of 20% and 100,000 cars, respectively. Nissan, Honda, and GM joined them, all because they could not secure enough supply.

I won’t dive into the weeds of the automotive supply chain, but the fact that the automotive industry makes up only about 10% of the semiconductor market (per McKinsey) suggests that the failure to serve a relatively small portion of the market is part of a bigger backdrop of complex, intertwined issues in the semiconductor space. Frankly, evidence has been mounting for years that we’re at another inflection point in computing, but where it goes and how fast it gets there is anybody’s guess. To people in the chip and computing industries, the changes represent new paradigms that energize the imagination. To everyday users, they’re hardly noticeable.

I thought the best way to make sense of these changes was through an overview of how the industry got to where it is today. In laying out this piece, I realized that doing it justice would require more than one part. To start, we need to review some history, and the history of chips tends to be dominated by one company: Intel.

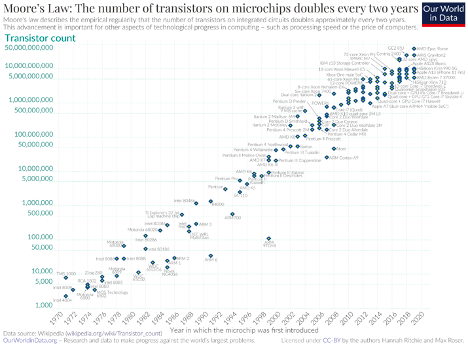

In 1965 Gordon Moore, who would go on to co-found Intel, projected that the number of transistors (the building blocks of chips) that could fit on a chip would double every year. In 1975 he revised this to every two years. Moore’s Law was never an actual law of physics so much as a standard to be pursued, and it was one the whole industry made good on (Figure 1).

Figure 1: Moore’s Law

Moore’s Law, however, actually has three components. It’s best to think of them as the legs of a stool: a deterioration in one throws the whole thing out of balance. In addition to the doubling of the number of transistors on a chip, each new generation of chips would consume half as much energy and, crucially, cost half as much by taking advantage of economies of scale (once manufacturing operations are up and running, the marginal cost of producing another chip, half the size of the prior generation’s, falls tremendously).
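To make the stool concrete, here is a minimal Python sketch of the idealized arithmetic, assuming each generation exactly doubles transistor count and exactly halves energy use and cost, as described above. The function name `moore_stool` is hypothetical, and real chips never tracked these rates perfectly. It also shows why Moore’s revision mattered: over the same decade, an annual cadence compounds to 1,024x, while a two-year cadence yields 32x.

```python
from __future__ import annotations


def moore_stool(years: float, doubling_period: float = 2.0) -> dict[str, float]:
    """Relative value of each 'leg' after `years`, normalized to 1.0 at year 0.

    Idealized assumption (illustrative only): every `doubling_period` years,
    transistor count doubles while energy use and cost each halve.
    """
    generations = years / doubling_period
    return {
        "transistors": 2.0 ** generations,  # leg 1: density doubles
        "energy": 0.5 ** generations,       # leg 2: energy halves
        "cost": 0.5 ** generations,         # leg 3: cost halves
    }


# One decade under the revised two-year cadence...
print(moore_stool(10))
# -> {'transistors': 32.0, 'energy': 0.03125, 'cost': 0.03125}

# ...versus the same decade under Moore's original annual cadence.
print(moore_stool(10, doubling_period=1.0))
# -> {'transistors': 1024.0, 'energy': 0.0009765625, 'cost': 0.0009765625}
```

Because all three legs compound on the same clock, a stall in any one of them, such as cost no longer halving, breaks the symmetry the sketch assumes.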

The tech titan of the era when Moore made his prognostication was IBM. In that day and age, tech companies were typically completely vertically integrated. This meant that IBM not only built its own hardware but also designed its own chips, developed the software that executed instructions on those chips along with the user applications on top, and, of course, serviced all of it. IBM’s customers got the benefit of working with a single vendor, but innovation moved at the pace of the slowest piece of the stack, because every piece was integrated and built to work with the others. That’s a theme we’ll return to often.

By the late 70s and early 80s, personal computers started to appear on the market, albeit with much less power than what IBM traditionally sold and serving a market far too small for Big Blue to worry about. But when employees started bringing these devices into the workplace, creating headaches for IT departments everywhere, corporate managers did what they naturally would at the time: they went to IBM, since that’s where they got most, if not all, of their technology. IBM, in an effort to prioritize speed and appease customers, promised a solution within 12 months. That wasn’t enough time for IBM to build everything itself, so it used third-party components. The components it settled on happened to be an Intel processor and an operating system called MS-DOS from a little firm in Washington called Microsoft.

There’s a saying in Silicon Valley that the contest is whether innovation gains distribution before distribution gains innovation. Microsoft and Intel, by virtue of being plugged into that era’s largest distribution channel (IBM), had their fortunes made. Both companies benefited enormously as their solutions became the standard for the PC era.

In Intel’s case, the selection of its processors also meant an endorsement of its instruction set. Specifically, Intel’s x86 Instruction Set Architecture (ISA) became the default standard in the personal computing industry and the one Microsoft’s operating system was built for. In simple terms, an ISA is the vocabulary of instructions a CPU understands: the contract between software and hardware that dictates how incoming instructions are encoded, broken down, interpreted, and processed. Users never interact with it directly, but every program has to be compiled into instructions for a specific ISA. The key is that with Intel’s x86 and Microsoft’s operating system as the industry standard, software developers had every incentive to optimize their applications for the pair rather than for competing standards of the day. Even competing CPU designers, like AMD, built their designs around the x86 ISA.
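A toy Python sketch can show why that contract creates lock-in. The two "ISAs" below are entirely hypothetical (they are not real x86 or ARM encodings): the same computation, 2 + 3, has to be encoded differently for each, and a program built for one is rejected by a decoder for the other. That, in miniature, is why moving away from x86 meant recompiling or rewriting software.

```python
from __future__ import annotations

# Two hypothetical instruction sets: same operations, different opcode bytes.
ISA_A = {0x01: "LOAD", 0x02: "ADD", 0x03: "HALT"}
ISA_B = {0x10: "LOAD", 0x20: "ADD", 0x30: "HALT"}


def run(program: list[int], isa: dict[int, str]) -> int:
    """Decode and execute a flat list of bytes under the given toy ISA.

    LOAD r, imm -> regs[r] = imm       (3 bytes)
    ADD  d, s   -> regs[d] += regs[s]  (3 bytes)
    HALT        -> return regs[0]      (1 byte)
    """
    regs = [0, 0]
    pc = 0  # program counter: index of the next byte to decode
    while pc < len(program):
        opcode = program[pc]
        op = isa.get(opcode)
        if op is None:
            # The CPU does not recognize this byte as an instruction.
            raise ValueError(f"illegal instruction 0x{opcode:02x} at byte {pc}")
        if op == "LOAD":
            regs[program[pc + 1]] = program[pc + 2]
            pc += 3
        elif op == "ADD":
            regs[program[pc + 1]] += regs[program[pc + 2]]
            pc += 3
        else:  # HALT
            return regs[0]
    raise ValueError("program ended without HALT")


# The same computation, 2 + 3, "compiled" separately for each toy ISA.
prog_for_a = [0x01, 0, 2, 0x01, 1, 3, 0x02, 0, 1, 0x03]
prog_for_b = [0x10, 0, 2, 0x10, 1, 3, 0x20, 0, 1, 0x30]

print(run(prog_for_a, ISA_A))  # 5
print(run(prog_for_b, ISA_B))  # 5
```

Calling `run(prog_for_a, ISA_B)` raises `ValueError` on the very first byte: the logic is identical, but the binary only makes sense to the ISA it was built for.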

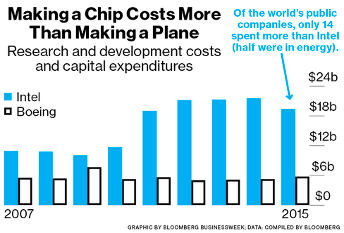

It was this capture of developer talent that propelled both Microsoft and Intel to dominant share in their respective markets and let them capture most of the computing industry’s profits. Intel plowed those profits back into the business in the form of research and development as well as innovative manufacturing processes, making sure that Moore’s Law stayed on course (Figure 2) and that Intel’s chips were best of breed. It also developed the best compilers, frameworks, and other software tools, so that software got the most out of an Intel CPU.

Figure 2: Intel’s R&D and CapEx in Perspective, Bloomberg

In practice, thanks to this integrated optimization, software generally ran faster on Intel machines. As long as that was the case, software developers had no reason to switch. Because the developer ecosystem was entrenched for decades, any new solution couldn’t just be better than what Intel put out; it had to be better by several orders of magnitude to justify rewriting software. Essentially, this ownership and intimate integration of x86 into its designs gave Intel its margin of safety. And a challenger not only had to be orders of magnitude better, it also had to promise ample supply to customers who were used to the enormous manufacturing scale and leading technology of Intel’s fabs. It’s a high hurdle for any competitor to overcome. That’s how Intel, with high unit profitability, a massive reinvestment opportunity, incredible IP, and astute management, roughly doubled the S&P 500’s annualized return over a 20-year period (Figure 3), even though the stock lost over 60% of its value when the tech bubble burst.

Figure 3: Intel (White, 16% CAGR) vs the S&P 500 (Orange, 8% CAGR)
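The two CAGRs in Figure 3 compound to very different outcomes. Here is a minimal sketch of the standard compounding formula, (1 + r)^n, assuming exactly 20 years at each rate; the helper name `growth_multiple` is hypothetical. At 16%, $1 grows to roughly $19.5, versus roughly $4.7 at 8%, so doubling the annual rate works out to about four times the ending value.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Ending value of $1 compounded annually at `cagr` (0.16 means 16%) for `years`."""
    return (1.0 + cagr) ** years


intel = growth_multiple(0.16, 20)  # Intel's ~16% CAGR from Figure 3
sp500 = growth_multiple(0.08, 20)  # S&P 500's ~8% CAGR from Figure 3

print(f"Intel: {intel:.1f}x, S&P 500: {sp500:.1f}x, ratio: {intel / sp500:.1f}x")
# prints: Intel: 19.5x, S&P 500: 4.7x, ratio: 4.2x
```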

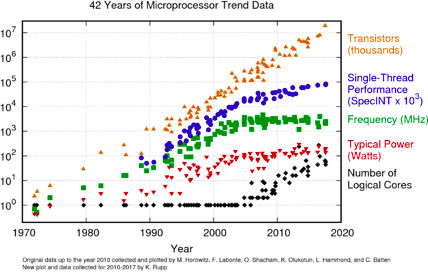

Around 15 years ago, the continued scaling of integrated circuits let computers fit into our pockets in the form of smartphones, setting off an explosion in the computing devices we all interact with. Beneath the surface, something else was happening at the same time (Figure 4): Moore’s Law started to die.

In blue below, you’ll notice that the slope of the line measuring single-core CPU performance mimics the slope in Figure 1 until the late 2000s. This is Moore’s Law as most people know it: a doubling in performance every two years. Around the time smartphones and tablets exploded, the performance of a single processor core started to slow meaningfully as it ran up against the limits of physics. So how did we get the improved performance demanded by the market and demonstrated in Figure 1? We added more cores (the black line) to each piece of silicon and split growing workloads across multiple cores and chips. That’s largely how the semiconductor industry has kept adding transistors to a chip and increasing performance.

Figure 4: Moore’s Law Has Broken Down for Over a Decade, Mule’s Musings

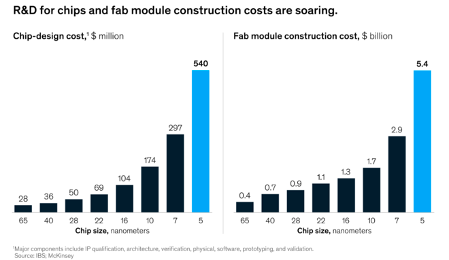

But it has come at a cost, literally (Figure 5).

Figure 5: The Costs to Design a Leading Chip Have Skyrocketed

One of the key legs of Moore’s Law was its economics: each process transition made chips cheaper. Those gains have started to be undone in recent years. Consider that chips at the 10-nanometer node were on the bleeding edge of innovation in late 2018/early 2019 (note: the size notations used in industry parlance are more marketing than anything and are not literal measurements). By this time next year, the leading-edge chip will cost 3x as much to design, and the infrastructure needed to manufacture it will cost 3x as much to build. If any force incentivizes innovation in a new way of doing things, I would guess that exponentially rising costs is one of them.

Around the time single-core CPU performance peaked, consumers were greeted with the iPhone, the device that broke the dam on the smartphone market and made computing a continuous experience. Shortly before its introduction in 2007, Apple had approached Intel about using the latter’s x86 chips in its laptop computers. At the same time, Steve Jobs inquired about a little-known processor that Intel owned the rights to produce via a merger but that wasn’t an x86 chip: the XScale ARM processor. He wanted to use the chip in the upcoming iPhone and have it manufactured in Intel’s fabs.

Intel said thanks, but no thanks.

“At the end of the day, there was a chip that they were interested in that they wanted to pay a certain price for and not a nickel more and that price was below our forecasted cost. I couldn’t see it. It wasn’t one of these things you can make up on volume. And in hindsight, the forecasted cost was wrong and the volume was 100x what anyone thought.” Intel CEO Paul Otellini, May 2013

I bring up the example for two reasons. First, to demonstrate Intel’s devotion to its x86 products and processes, both extremely lucrative and dominant in their markets. The decision to pass on Apple is defensible: why divert resources and capacity from a sure thing (x86) to bet on something with no clarity on long-term volumes? Second, because Apple’s use of an ISA outside the x86 ecosystem was a seminal moment, albeit a very logical one, for reasons I’ll explain. It opened the floodgates to more viable alternatives in chip design and structure than we’ve ever seen.

I’ll explain more in the upcoming part(s).

Sources

- https://stratechery.com/2016/disrupting-basketball-thiel-gawker-follow-up-intel-and-arm/

- https://stratechery.com/2018/intel-and-the-danger-of-integration/

- http://www.bloomberg.com/news/articles/2016-06-09/how-intel-makes-a-chip

- https://medium.com/@gavin_baker/implications-of-intel-losing-its-manufacturing-lead-amd-xilinx-nvidia-and-qualcomm-40dee6278369

- https://medium.com/swlh/what-does-risc-and-cisc-mean-in-2020-7b4d42c9a9de

- https://stratechery.com/2020/apple-arm-and-intel/

- https://medium.com/@gavin_baker/investing-mistakes-chapter-1001-9f0b7dbc6637

- https://stratechery.com/2016/andy-grove-and-the-iphone-se/