The Wooden Nickel is a collection of a handful of recent topics that have caught our attention. Here you’ll find current, open-ended thoughts. We use this piece as a way to think out loud in public rather than to make formal proclamations or projections.

Eight days ago, markets suffered one of those hard sell-offs with a defined catalyst that will be remembered for years to come by those who pay close attention, much like the VIX-pocalypse of 2018, the Covid crash of 2020, or the Silicon Valley Bank fiasco of 2023. With the S&P 500 at peak concentration risk and levered specifically to technology and artificial intelligence (AI) themes, investors sold furiously after China’s DeepSeek released its AI models and chatbot. Nvidia’s nearly $600 billion decline was the biggest single-day loss in market value for any stock in history.

1. How Does DeepSeek Compare?

DeepSeek’s R1 is undoubtedly an impressive model. To anyone who does not follow the intricacies of the various AI models and labs, using R1 would feel on par with some of the better-known products that have been around much longer, like OpenAI’s prior generation of models or current offerings from Google and Anthropic. Each has its strengths and weaknesses, but between official benchmarks (which are imperfect measures) and anecdotal testimonials, it’s clear that in absolute terms R1 is competitive on performance. Further, among the world’s open models (meaning other people and firms can adopt and innovate on top of DeepSeek’s work, as Microsoft already has), it is certainly the best.

The open nature of R1 is a critical element. Open models allowed DeepSeek (and others) to build on work done by firms that shoulder the majority of the capital costs, Meta in this case (though there are indications that DeepSeek also used OpenAI’s own models to train R1). Rather than reinvent everything themselves, DeepSeek’s team could iterate and experiment. Openness also diffuses innovations more quickly and more precisely than the closed approach of OpenAI and other labs. For an industry being built from the ground up (and one viewed as a strategic asset), diffusing innovations to as many participants as quickly as possible is crucial.

The most publicized part of the DeepSeek drama has been the alleged cost of just over $5 million to train its system, compared to the roughly $100 million that GPT-4 reportedly cost. There’s a lot to unpack in that $5 million training figure, which is misleading at best; so many costs were excluded to arrive at it that Enron’s accountants would be proud. On closer review, the $5 million is best thought of as the marginal cost of the final training run, excluding the prior experimentation, capital investment, R&D, and certain salaries. To get to the point where that marginal cost could be $5 million, it is very likely that hundreds of millions, if not billions, of dollars were spent. Further, technology operates on declining cost curves, and in AI we’ve seen a very rapid decline in the cost to serve (more in a later section). As a result, comparing a system built today with one built over a year ago is not an apples-to-apples comparison. They exist in different economic contexts.

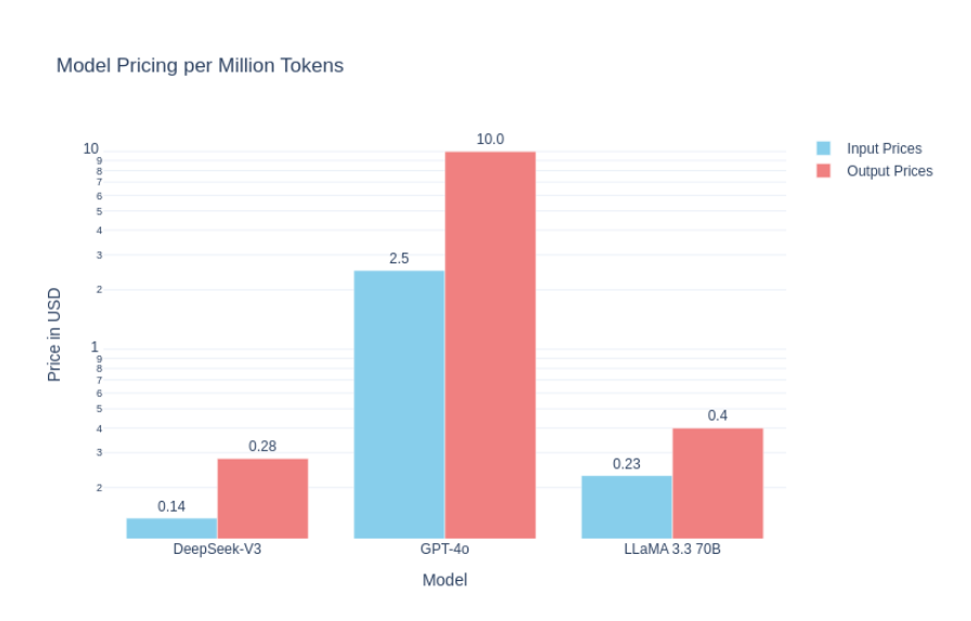

The more important cost figure to consider is DeepSeek’s inherent cost to serve users and execute AI workloads. This is where the team’s ingenuity shows, and it is very impressive. A technical explanation that highlights some of the key innovations can be found here, but suffice it to say that the design of DeepSeek’s model affects not just its performance but, crucially, its cost structure.

Figure 1: Sample Inference Price Comparison

2. What Does It Mean for AI Spending?

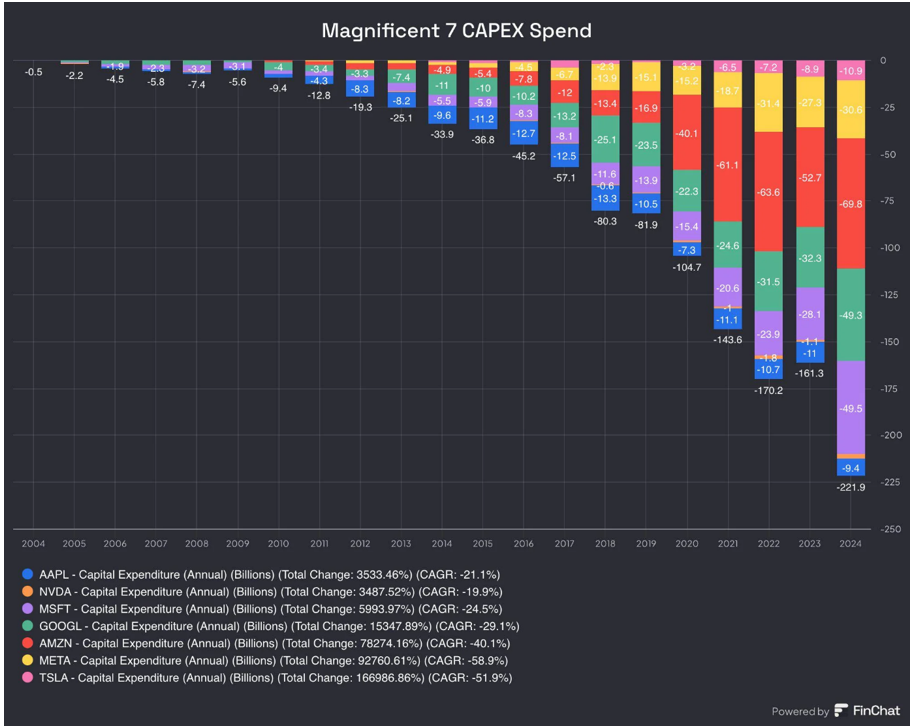

Just a few days before the DeepSeek sell-off, OpenAI and Oracle announced “Stargate,” an eye-opening plan to spend $100 billion, and potentially up to $500 billion, on AI data centers, all in pursuit of superintelligence: machines that can complete tasks better than humans. This comes on top of the massive capital expenditure programs already underway at the major tech companies, which together easily rival the biggest capital projects in human history, even adjusted for inflation.

Figure 2: Magnificent 7 Cap Ex

But if equivalent systems can be developed for a fraction of the cost (even if the publicized figures are misleading), why do we need these massive spending programs? That is what investors asked last Monday as they shot first and asked questions later, sending Nvidia down 17%, Oracle down 14%, and Broadcom down 17%, among other dramatic moves.

It may be common sense, and it may even be rational, but I would bet that is not how these CEOs are thinking about the current paradigm, as we noted two months ago. If anything, I would wager heavily that the major tech CEOs viewed DeepSeek’s arrival in one of two ways: as a Sputnik moment that accelerates the ongoing race, or with relief that AI can be run so cheaply, because that frees up capital for ever bigger models and systems. In either scenario, it’s a signal to push forward.

The latter would certainly be far more consistent with the 60+ years of computing history we’ve seen. Technology has never stopped at good enough. Thanks to Moore’s Law, the cost of computing power fell by roughly half every year or two for 50 years, and nowhere along the way did the ecosystem stop and say, “We have enough computing power for all the use cases we’ll ever need.” The industry simply doesn’t function that way. Instead, new use cases emerge as costs fall.

Just look at the last 18 months: the inference costs of AI have fallen by 1,000x, yet the ecosystem is not slowing down in the slightest. The cost declines are the point. Humanity has never stopped and said, “We have enough intelligence.” And it won’t stop now.

Figure 3: GPT4 Costs have fallen by 1,000x in 18 months
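To put the 1,000x figure next to Moore’s Law, here is a small Python sketch that converts a total cost decline over a period into implied per-month and per-year rates. It assumes the decline compounds evenly over the period (a simplification, since real price cuts arrive in steps), and the Moore’s Law benchmark assumes costs halve every 12 to 24 months. The `implied_rates` helper is hypothetical, written only for this illustration.

```python
# Sketch: convert a total cost decline over a period into implied
# per-month and per-year rates, assuming the decline compounds evenly.

def implied_rates(total_factor: float, months: float) -> tuple[float, float]:
    """Hypothetical helper: return (monthly, annual) cost-reduction multiples
    for a total decline of `total_factor` spread geometrically over `months`."""
    monthly = total_factor ** (1 / months)
    annual = monthly ** 12
    return monthly, annual


# AI inference: ~1,000x cheaper over ~18 months (figure cited in the text).
ai_monthly, ai_annual = implied_rates(1000, 18)

# Moore's Law benchmark (assumption): cost halves every 12 to 24 months.
_, moore_fast = implied_rates(2, 12)
_, moore_slow = implied_rates(2, 24)

print(f"AI inference: ~{ai_annual:.0f}x cheaper per year "
      f"(~{1 - 1 / ai_monthly:.0%} cheaper each month)")
print(f"Moore's Law:  ~{moore_slow:.2f}x to {moore_fast:.2f}x cheaper per year")
```

Under these assumptions, a 1,000x decline over 18 months works out to about 100x per year (roughly a third cheaper every month), versus about 1.4x to 2x per year for a Moore’s Law-style halving every one to two years.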

The composition of that spending and its priorities may shift (speed and latency versus raw power, for example), but I don’t think fundamental spending is on the edge of a cliff. What stock prices already discount is another matter entirely.

3. Are the Export Controls a Failure?

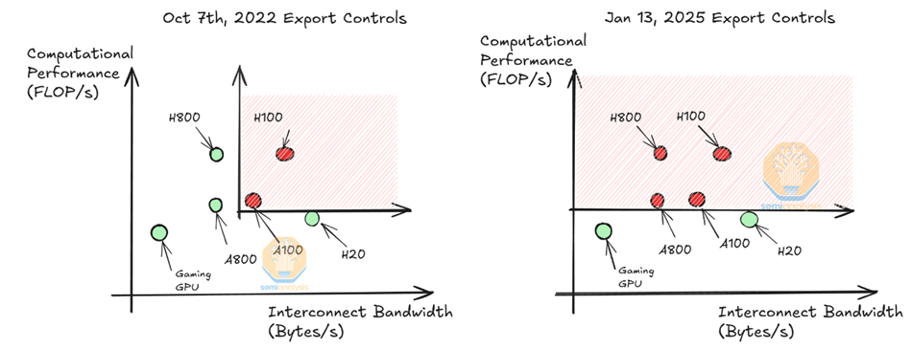

A natural question, given DeepSeek’s popularity and very real innovations, is whether the multiple rounds of export controls implemented by the US Commerce Department have been a failure. As a refresher, the Biden administration imposed controls on which semiconductors can be shipped to China based on performance characteristics, including raw computing power and data transfer rates. The rules, initially published in October 2022 (before ChatGPT launched), were updated in October 2023 and January 2025, with each round becoming more restrictive and more comprehensive.

Advocates promoted the controls as drawing a line of demarcation: from that point onward, the gap in capacity and capability between China and the West would widen. Skeptics believed the controls would simply spur domestic innovation in China, encouraging it to find ways to make its own chips and eliminating a point of leverage the US holds.

So if DeepSeek, a company most people had never heard of even a month ago, can come out of nowhere to match or exceed leading AI labs by certain measures, doesn’t that mean these controls have been all for naught?

It’s an understandable question at first blush, but by and large it doesn’t hold up to scrutiny. First, like any major policy, the controls take effect with a lag rather than immediately. Servers are depreciable assets that get replaced over roughly four years rather than every year, so as time passes, the chips China has already secured will fall further behind the latest generation, affecting model development and performance.

Second, most (but not all) of the chips DeepSeek secured were legally available for purchase until October 2023, and restrictions were tightened further only about a month ago. Yes, some of the most performant chips were smuggled, but that’s precisely the point. Controls will never reduce the amount sold to China to zero; that is a completely unrealistic scenario. But they can be instrumental in keeping China from scaling up to the millions of chips needed for a full-fledged ecosystem.

Figure 4: Export Controls Updated in January (Source: SemiAnalysis)

Third, and related, it is highly likely, if not guaranteed, that without the current controls in place, what DeepSeek released would have been much more powerful and capable than what we see today. In an interview with a Chinese technology publication last year, DeepSeek’s own CEO said their biggest hurdle to developing more powerful AI systems was the export controls. In other words, we haven’t fully seen just how innovative a company (let alone a whole country) can be without tight restrictions.

4. Recommended Reads and Listens